A rule-based system works very reliably under optimal inspection conditions and with constant parts. The situation is different if the parts come out of the machine slightly differently from batch to batch, but are still OK parts. Recalibration can then quickly become time-consuming and costly. It's a different story with AI-based systems like the Maddox AI system. This system can learn that parts can look different and still be OK parts.

Usability - Can I also Operate Maddox AI?

Episode 7

Author: Hanna Nennewitz

In episode 5, Peter Droege, CEO and co-founder of Maddox AI, explained that one problem with visual quality control today is the poor usability of rule-based systems. They are not intuitive to operate and often need specialist personnel to maintain them. In addition, a survey by Maddox AI found that many companies are reluctant to adopt AI-based systems for quality control because they assume they lack the expertise to train AI systems in-house. If you’d like to take a closer look at the survey, you can do so here. Peter told me that Maddox AI’s system, by contrast, is supposed to be easy to use even for non-experts, and that is what I am going to try out in this episode. To do so, I will try to get both the AI-based Maddox AI system and the Halcon industrial image processing software from MVTec to work.

Maddox AI

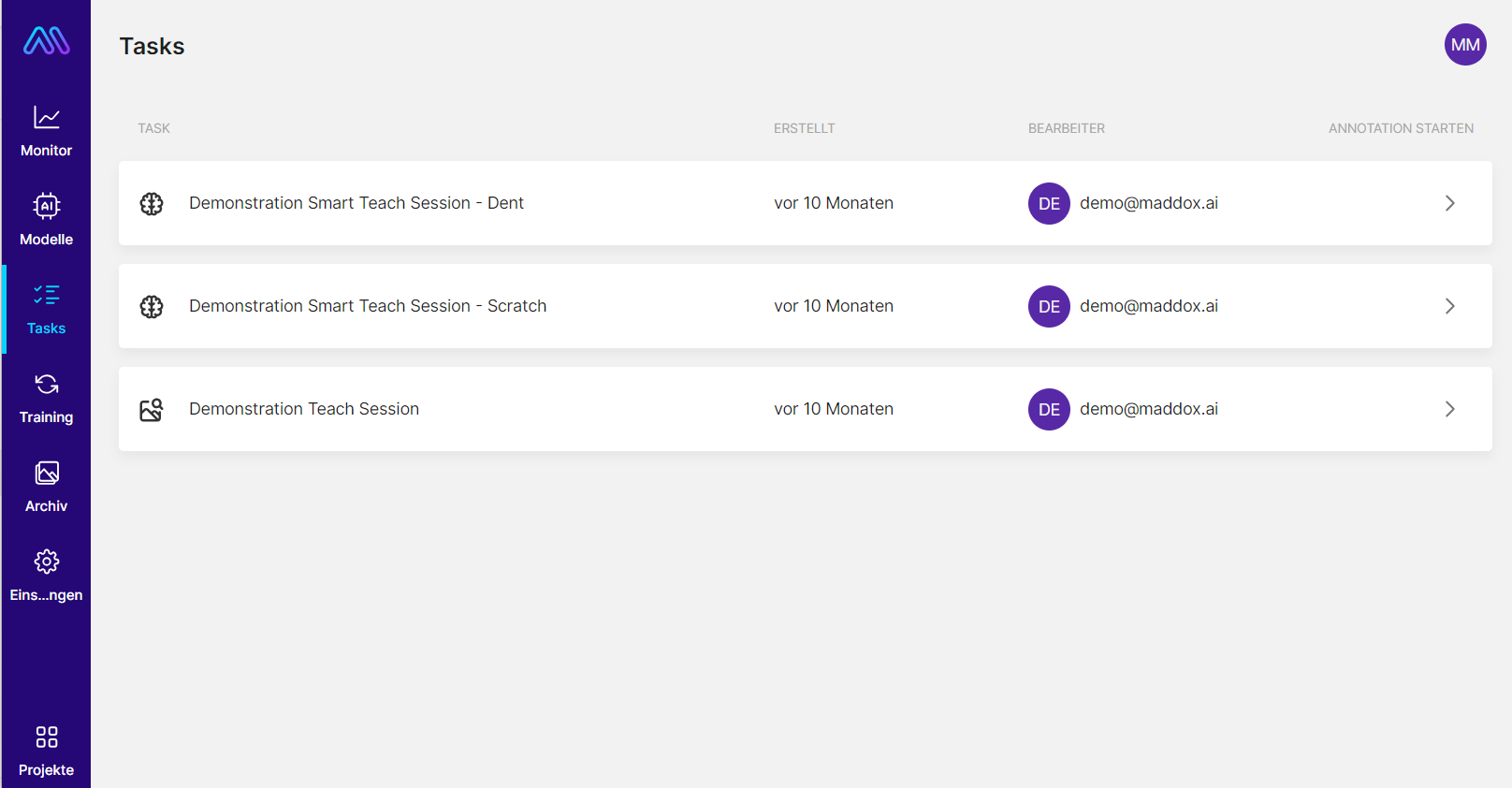

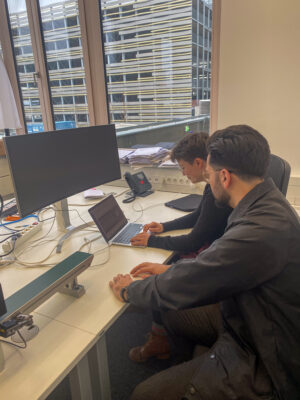

The first thing I do is test Maddox AI. During a short training session, Behar Veliqi, CTO and co-founder of Maddox AI, shows me how I can use Maddox AI. Behar explains to me that all the tasks I need to do as a Maddox AI customer can be found in the cloud software under the “My Tasks” tab. Then he explains that one basically follows a three-step process when training a model with Maddox AI:

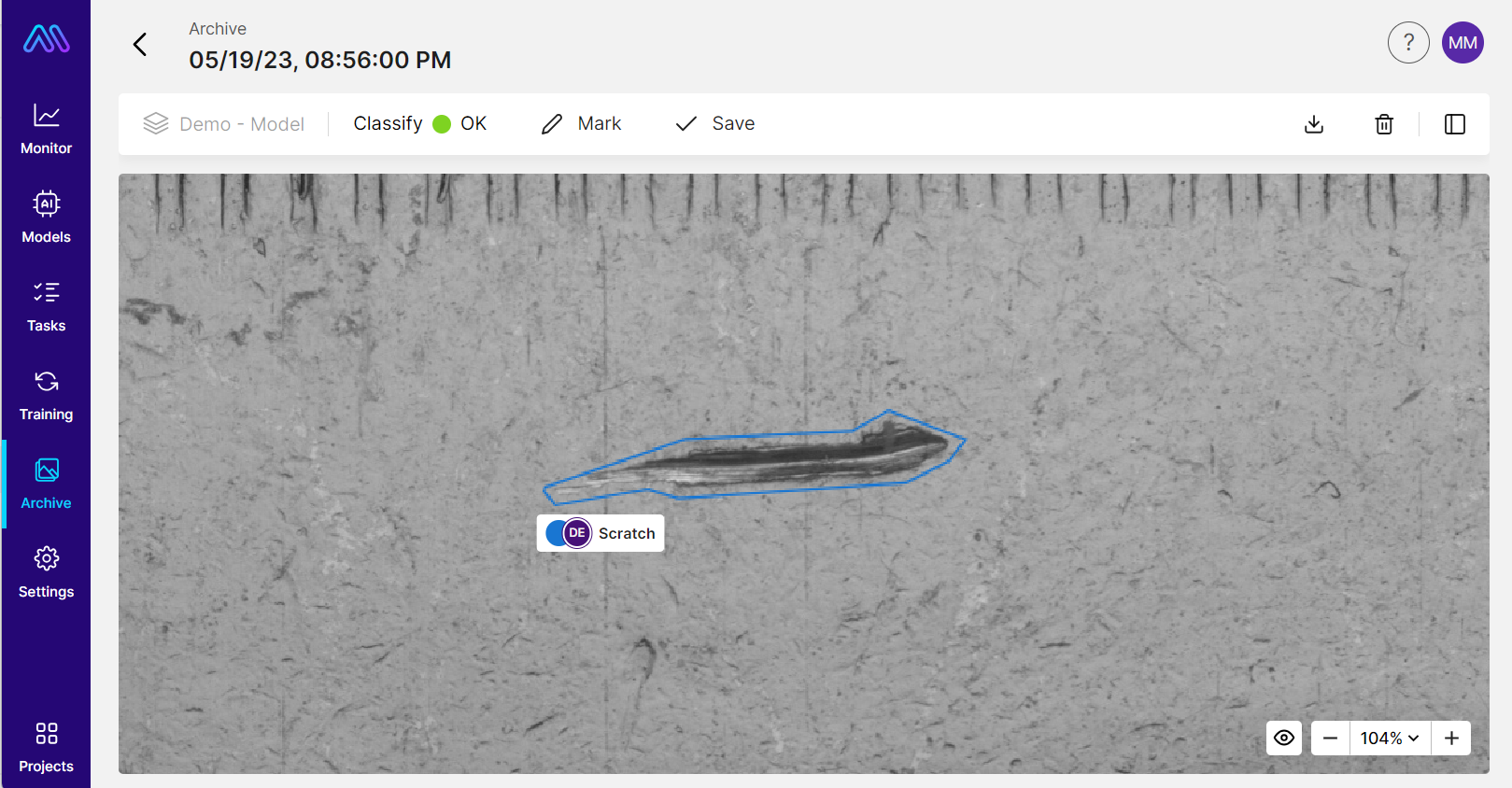

The first step is to draw polygons around the defects in the captured images. The number of images to annotate depends on the use case: sometimes 5-10 images per defect class are enough, other times up to 100 are needed. In the second step, the annotated images are used to start an initial training run of the AI model. The third step is fine-tuning: the model predictions are compared with the human annotations and corrected where necessary.

The following video explains the three individual steps in more detail:

After the short training by Behar, I now go into the cloud application of Maddox AI on my own and start with the “My Tasks” tab.

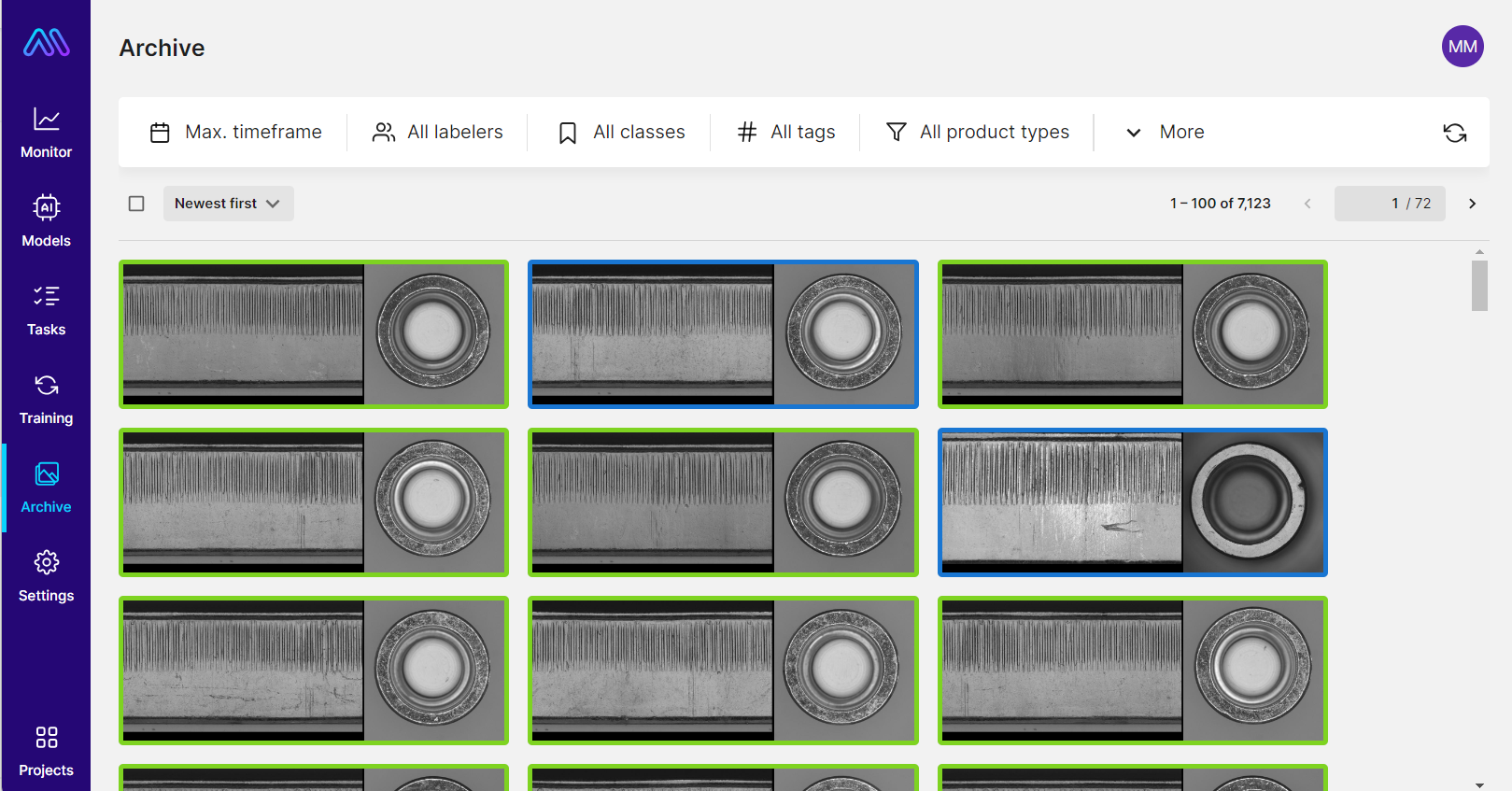

Here I find a task that has already been set up for me, titled “Teach Session”. In advance, I had given the Maddox AI team the parts for which I wanted to create an example quality control model. The team captured images of these parts, which were automatically uploaded to the cloud. My task now is to outline the defects as accurately as possible with polygons and to select whether each one is a scratch or an impact. I had previously defined these as my project-specific defect classes under “Settings”.
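To make the idea of a polygon annotation more concrete, here is a minimal sketch of how one such record could be represented. This is only an illustration under my own assumptions, not the format Maddox AI actually uses: the class name `DefectAnnotation`, its fields and the file name `part_001.png` are all hypothetical.

```python
from dataclasses import dataclass

# The two project-specific defect classes named in the article.
DEFECT_CLASSES = {"scratch", "impact"}


@dataclass(frozen=True)
class DefectAnnotation:
    """One polygon drawn around a defect (hypothetical structure)."""
    image_id: str
    defect_class: str
    points: tuple  # polygon vertices as (x, y) pixel coordinates, in drawing order

    def __post_init__(self):
        if self.defect_class not in DEFECT_CLASSES:
            raise ValueError(f"unknown defect class: {self.defect_class}")
        if len(self.points) < 3:
            raise ValueError("a polygon needs at least three points")

    def area(self) -> float:
        """Enclosed area in square pixels, computed with the shoelace formula."""
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0


# A thin 40 x 4 pixel scratch on a hypothetical image.
scratch = DefectAnnotation(
    "part_001.png", "scratch", ((10, 10), (50, 10), (50, 14), (10, 14))
)
print(scratch.area())  # 160.0
```

Restricting `defect_class` to the classes defined beforehand mirrors the way I could only choose between the two classes I had set under “Settings”.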

After I have completed the teach session, all annotated images are automatically saved in the so-called defect book. The defect book is the basis for training the AI model.

I can now train the Maddox AI system using the images stored in the defect book.

After the initial training is complete, I check the performance of the AI model by comparing the model predictions with the human annotations. To do this, I simply go back to the “My Tasks” tab and open a so-called “Smart Teach Session”, which the AI creates automatically. In the Smart Teach Session, both my annotations and the model predictions are displayed. Of particular interest, of course, are the images where the model prediction differs from my annotation. There are two possible reasons for this:

The model has marked an area as a defect that is actually OK. To avoid pseudo-rejects (good parts that are wrongly rejected) in the future, I can give the model feedback that it has made a mistake and that the area should in fact be rated as OK.

The model has detected a defect that I forgot to annotate. In this case, I should also mark the defect with a polygon so that the dataset for the AI model is as good as possible. This matters because the quality of the dataset can greatly influence the performance of my model. You can read more about the influence of the dataset on model performance in episode 4.
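The comparison behind such a review can be sketched in a few lines. This is a simplified illustration under my own assumptions, not the Maddox AI implementation: regions are axis-aligned boxes instead of polygons, a prediction counts as "matched" if its intersection over union (IoU) with any human annotation is at least 0.5, and the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def unmatched_predictions(human_boxes, model_boxes, min_iou=0.5):
    """Model predictions that no human annotation overlaps well enough."""
    return [m for m in model_boxes
            if all(iou(m, h) < min_iou for h in human_boxes)]


def apply_review(human_boxes, flagged, decisions):
    """Apply the reviewer's verdicts to the flagged predictions.

    decisions[i] is "ok" (model was wrong, area is fine) or
    "defect" (model was right, the annotation was forgotten).
    """
    false_alarms = [box for box, d in zip(flagged, decisions) if d == "ok"]
    added = [box for box, d in zip(flagged, decisions) if d == "defect"]
    return human_boxes + added, false_alarms


human = [(10, 10, 50, 14)]
model = [(11, 10, 50, 14), (100, 100, 120, 120), (200, 40, 230, 60)]

flagged = unmatched_predictions(human, model)
updated, false_alarms = apply_review(human, flagged, ["ok", "defect"])
print(flagged)       # [(100, 100, 120, 120), (200, 40, 230, 60)]
print(updated)       # [(10, 10, 50, 14), (200, 40, 230, 60)]
print(false_alarms)  # [(100, 100, 120, 120)]
```

Both cases described above start from the same signal, a prediction without a matching annotation. Only the human verdict decides whether it becomes feedback for the model or a new annotation.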

After completing the Smart Teach Session, I train the model again.

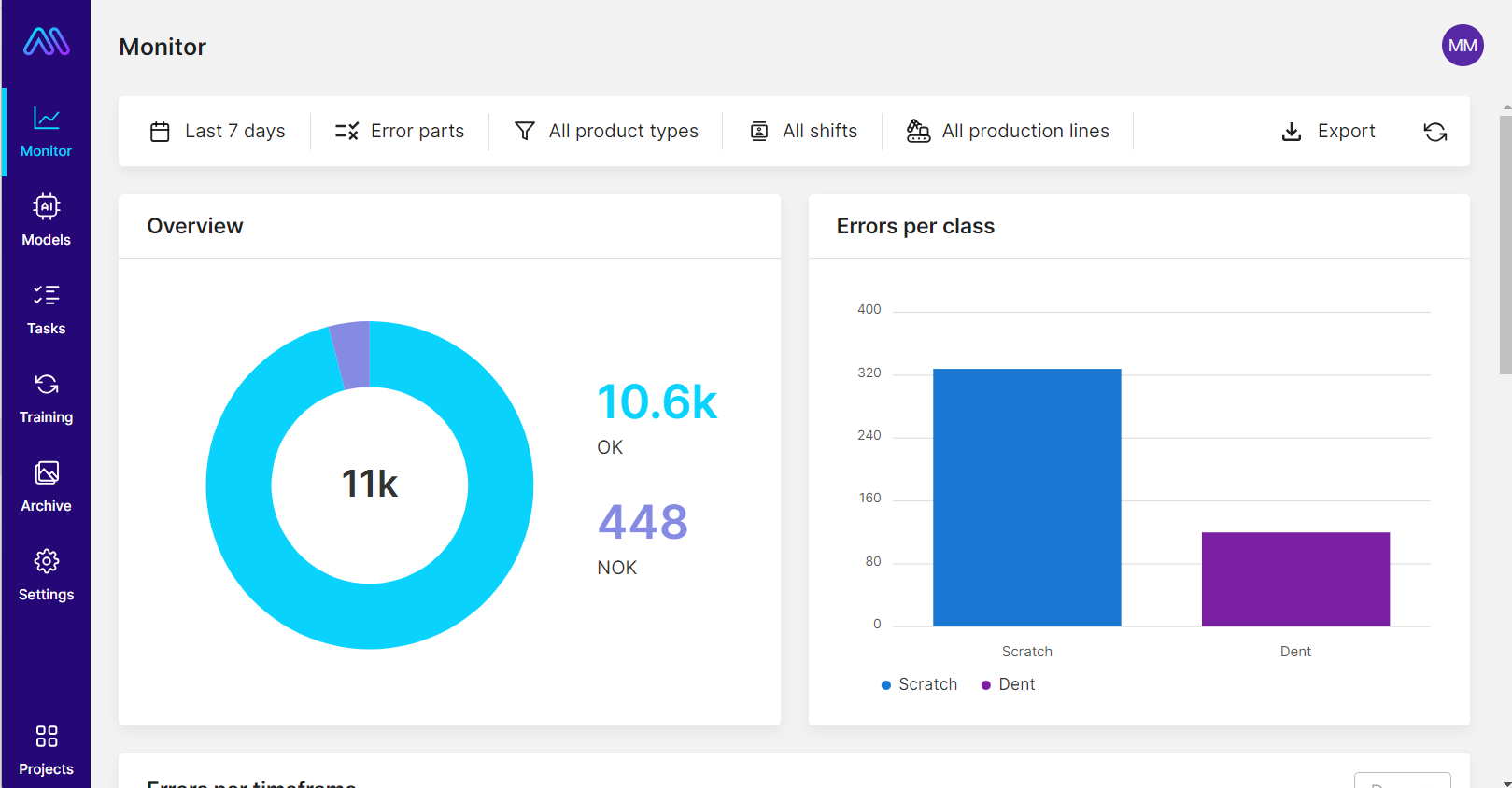

I repeat this ping-pong game between human and model once more, and then the model reaches the desired recognition accuracy of 98%. I can then deploy the model to the local industrial PC, which again takes just a single click in the interface. And it works. I am quite surprised myself at how easy it was.

The whole process took me about two hours. Under Monitor, I can now see what the quality of my mini-production looks like. I can see how many OK and NOK parts are produced and which defect class occurs most frequently.
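The kind of summary shown in the monitoring view can be illustrated with a small sketch. It assumes, purely for illustration, that each inspected part is represented by the list of defect classes found on it, with an empty list meaning the part is OK. The function name `summarize` and the sample data are hypothetical.

```python
from collections import Counter


def summarize(results):
    """Count OK and NOK parts and find the most frequent defect class.

    results: one list of defect-class names per inspected part;
    an empty list means the part is OK.
    """
    ok = sum(1 for defects in results if not defects)
    nok = len(results) - ok
    counts = Counter(d for defects in results for d in defects)
    most_frequent = counts.most_common(1)[0][0] if counts else None
    return {"ok": ok, "nok": nok, "most_frequent_defect": most_frequent}


# Five hypothetical parts: three OK, two with defects.
parts = [[], ["scratch"], [], ["scratch", "impact"], []]
summary = summarize(parts)
print(summary)  # {'ok': 3, 'nok': 2, 'most_frequent_defect': 'scratch'}
```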

My conclusion: The operation of the Maddox AI software is intuitive and was also easy for me to understand. Even as an absolute layperson, I was able to train and optimize my own model after a short time.

The Rule-Based System

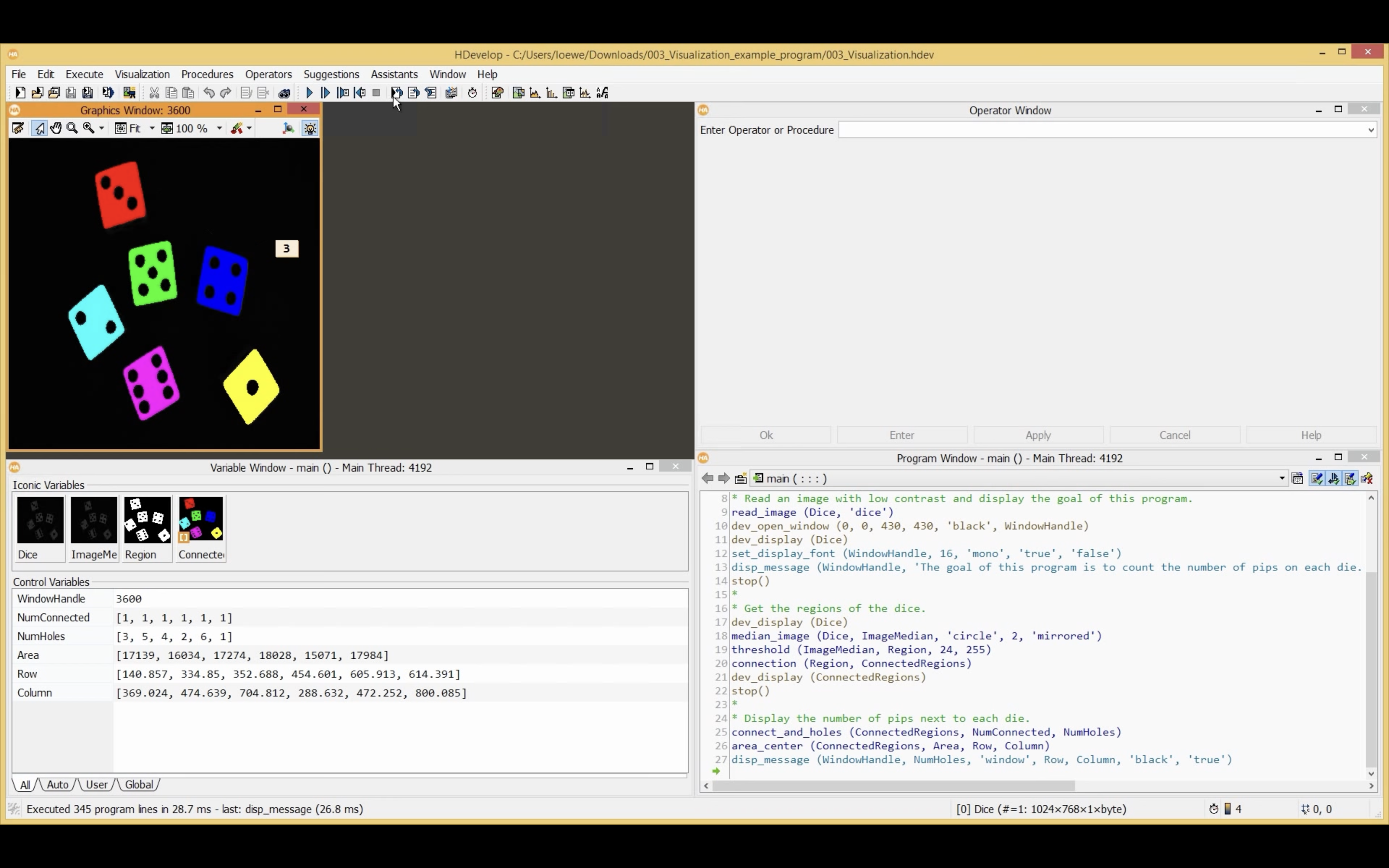

Now to the rule-based system, Halcon from MVTec. Here Daniel Leib was at my side to help me. Daniel works in hardware development at Maddox AI and already worked with Halcon during his mechatronics studies at Reutlingen University. I meet him, too, for a short training session on the system. First he explains the different windows and functions that are visible. So far so good: with Maddox AI I also had to get to know the different tabs and their functions. This takes a little longer than with Maddox AI, however, because at first I don’t really understand how some of the windows differ from each other. Once I feel I have at least understood the basic functions, we move on. Daniel uploads a photo of one of the parts to be tested and shows me that, in order to program a test task, I need to write my own code in the “Program Window”. Once I think I have understood this, Daniel explains some further aspects of the programming language that I need to know in order to use Halcon.

After our meeting, I set to work on the program myself. First I remind myself what the different windows are for, and then I decide to upload a photo to the application. But here I already run into difficulties. I find it hard to judge which of the captured images is well suited for creating a test task, so I have to ask Daniel for help. This was easier with Maddox AI, because the software had already made a pre-selection for me, so the entire first step that I have to take with Halcon was not needed. After Daniel has helped me select the image, I try to write the program myself. However, I have to ask Daniel again and again, because I find the structure of the user interface confusing and I particularly struggle with writing my own code. In the end, I only manage it with a lot of help from Daniel.

My conclusion: Halcon is much more complex to operate and harder to understand. Especially for me as a layperson, the user interface is not intuitive. In addition, you have to learn a dedicated programming language and write your own code, which caused me some difficulties as an absolute programming novice. With the AI-based system from Maddox AI, operation was much easier: I was assigned clear tasks, the software is graphically structured, and so all I actually had to do was evaluate different images.

Nevertheless, using an AI-based system does not automatically mean that the user interface is easily accessible for laypeople. Here too, there are big differences in user-friendliness, and some programs are difficult to use. So, if you want to benefit from intuitive inspection software in your company, get in touch with us. Maddox AI, as I have experienced myself, offers software that even laypeople can use.

After comparing ease of use in this episode, I turn to the problem of recalibration in the next episode. In doing so, I will again compare rule-based systems and AI-based systems.
